Ricky Gonzalez

Hello, I am Ricky Gonzalez!

I am a PhD Candidate in the Information Science Department at Cornell University, Cornell Tech. I am a Human-AI Interaction researcher.

On this website you will find many things: my work from before the PhD, my current research projects, and other milestones.

My research involves designing intelligent interactive systems focused on empowering people with disabilities. To date, I have worked with Blind and Low Vision users to improve the accessibility of 3D virtual environments, data analysis, AI-powered systems, and mobile cameras. Furthermore, my colleagues and I have developed novel ways of interacting in Virtual Reality and with 3D-printed models using Augmented Reality.

Research Highlights

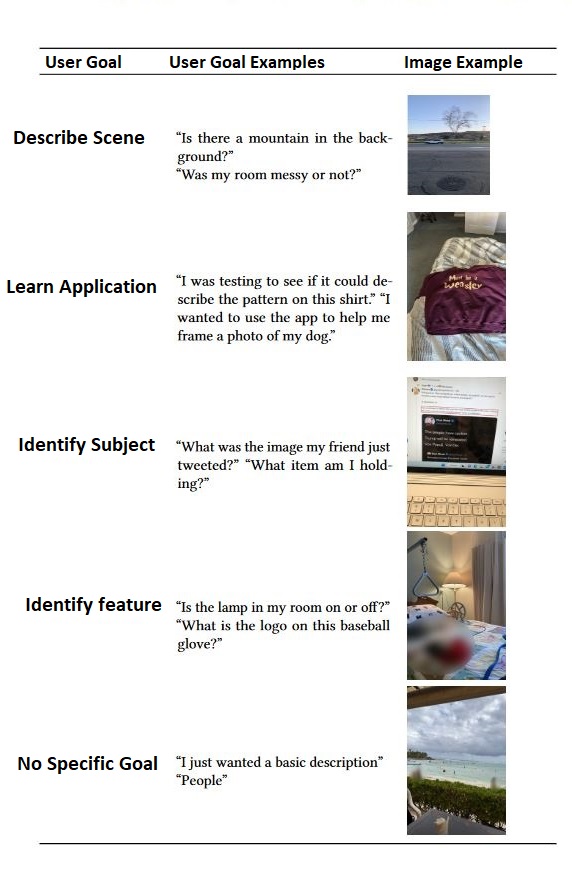

AI has immense potential to help Blind and Low Vision people access visual information. In fact, SeeingAI and similar applications have been generating visual descriptions on the fly for almost a decade. Anecdotally, these apps have had a huge impact on the independence of Blind and Low Vision people. But... is this truly the case? In this paper, we present a thorough analysis of hundreds of user interactions with a scene description application to answer two questions: Why do Blind and Low Vision people use applications like SeeingAI, and how useful are they?

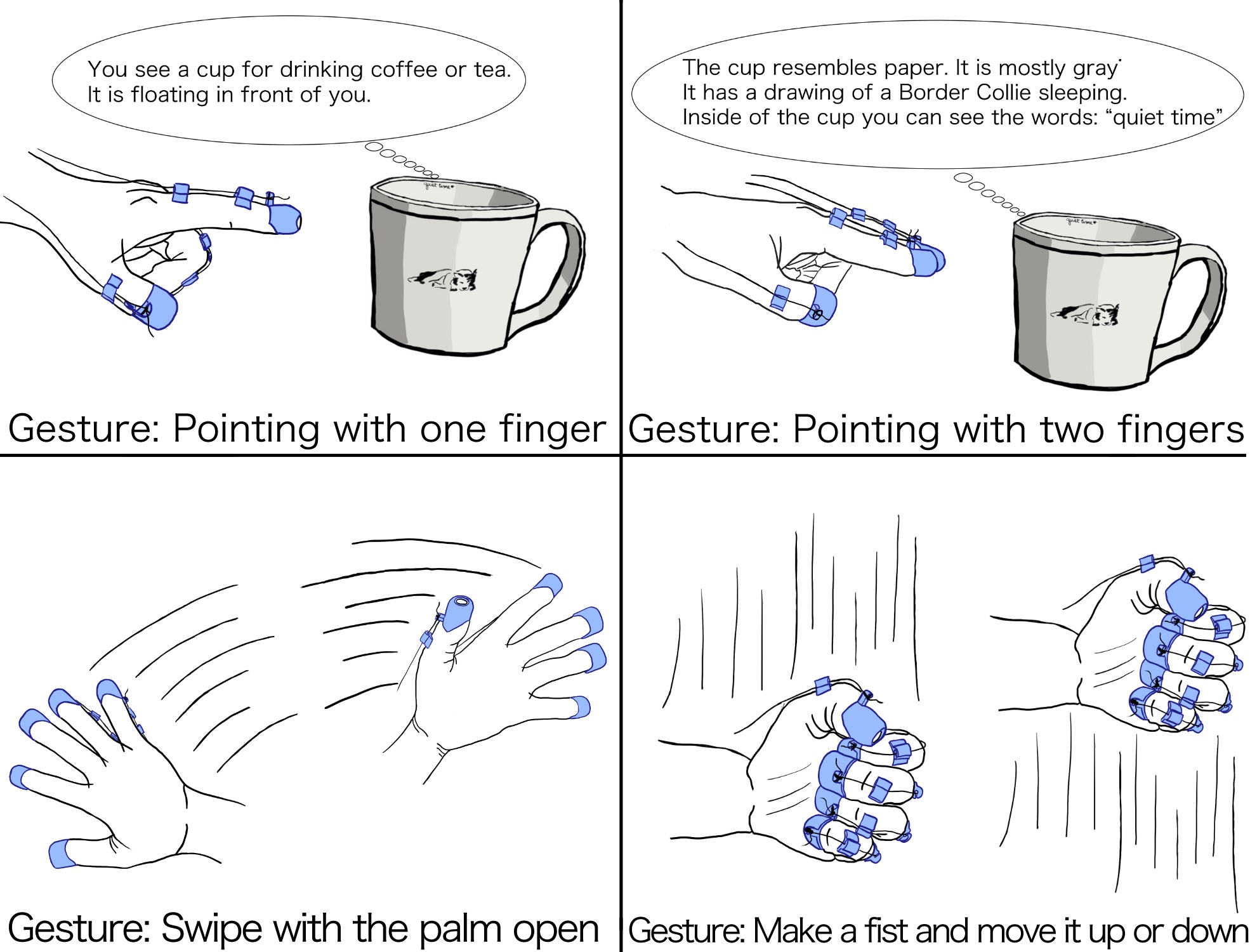

We created a series of hand gestures that people with visual impairments can use to trigger descriptions interactively in a VR environment. These gestures allow people with visual impairments to obtain information about the environment and to dynamically control the speech rate of the voice reader.

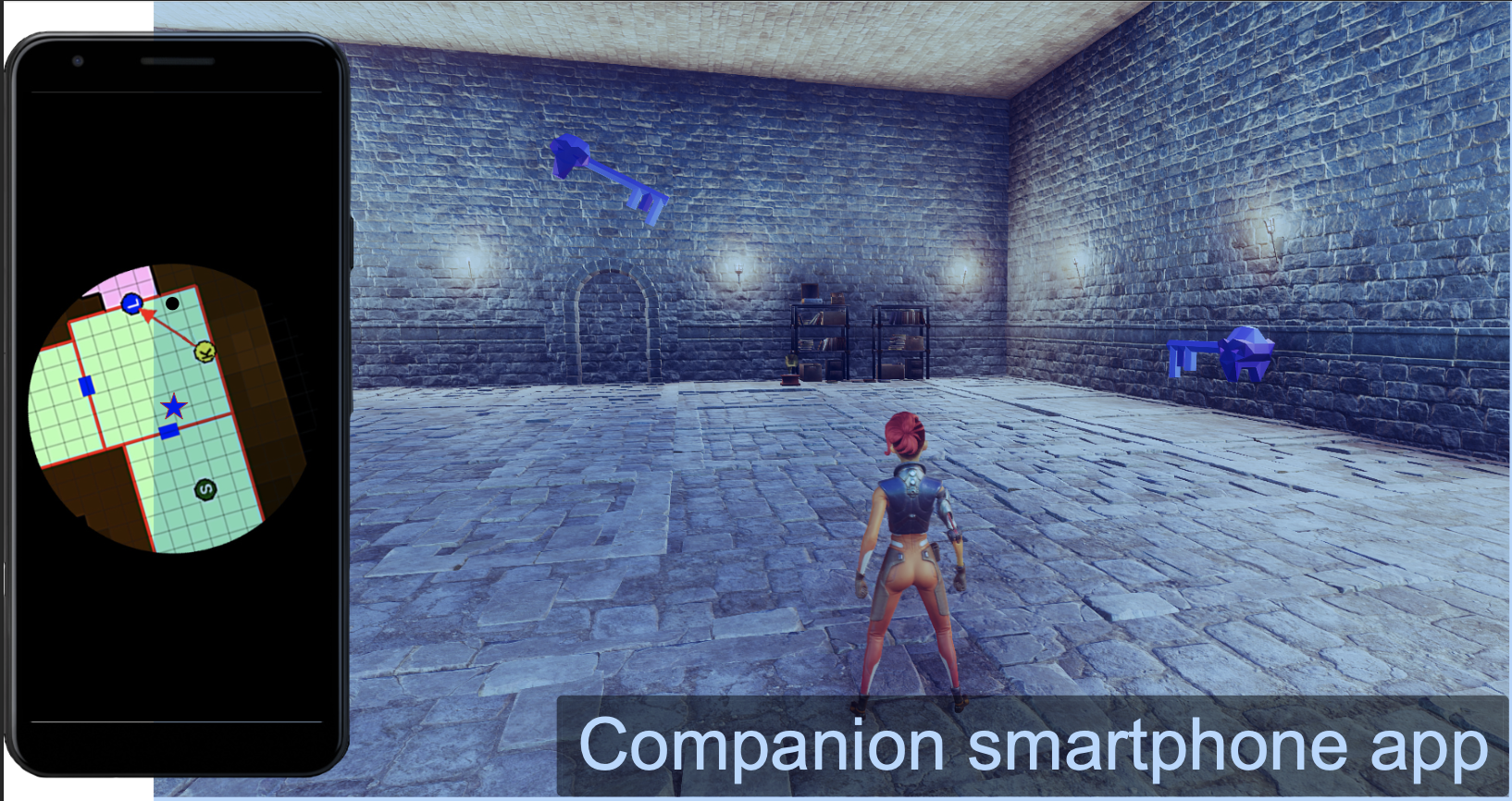

We implemented four leading approaches to facilitating spatial awareness for gamers with visual impairments and investigated their merits and limitations. The four tools are a smartphone map, a whole-room shockwave, a directional scanner, and a simple audio menu of points of interest. We also evaluated how much gamers value six types of spatial awareness that have been found to be important in physical-world settings. Our findings reveal important design implications for future spatial awareness tools.

Recent Activity

Internship!

«May 20, 2024»

«I received a return offer from JPMorganChase to rejoin the Global Technologies Applied Research Team (GTAR) XR team. We are expanding the functionality and use cases of the prototype we developed last summer.»

Accepted!

«May 12, 2024»

«Our full paper investigating how Blind and Low Vision people use scene description applications was accepted to CHI'24.»

Submitted!

«Apr 15, 2024»

«We submitted a paper to ASSETS'24 presenting designs for accessible forms of nonverbal cues in VR for Blind and Low Vision people.»

Submitted!

«Sep 15, 2023»

«We submitted a paper to CHI'24 investigating how Blind and Low Vision people use applications like SeeingAI.»

Patent!

«Aug 23, 2023»

«During my internship with the GTAR XR team at JPMorganChase, we filed a patent application for an accessible interaction technique for data analysis.»

Internship!

«Jun 03, 2023»

«I joined the JPMorganChase Global Technologies Applied Research Team (GTAR) XR team, led by Blair MacIntyre, where I worked on accessible immersive data analytics for Blind and Low Vision people.»