Talkit++

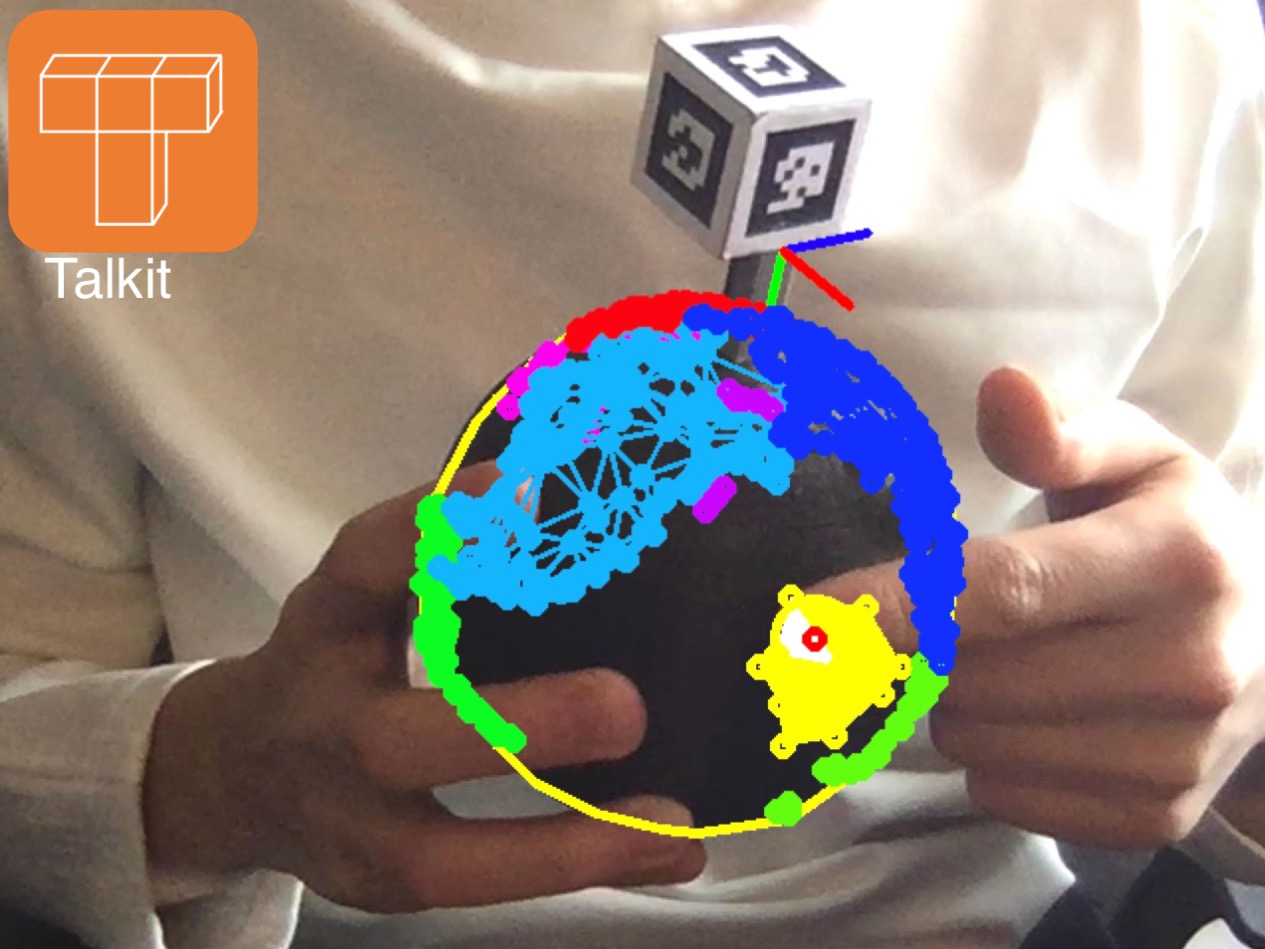

Talkit++ is an iOS application that allows a blind user to interact with an augmented 3D printed model. To begin exploring a model, the user sticks a sticker on her fingernail and slides the tracker onto the printed scaffold. Using an RGB camera, Talkit++ locates the model by sensing the tracker and finds the user’s finger by tracking the sticker. Talkit++ speaks audio information when the user places the finger with the sticker on an annotated element of the model.

Abstract

Tactile models are important learning materials for visually impaired students. With the adoption of 3D printing technologies, visually impaired students and teachers will have more access to 3D printed tactile models.

We designed Talkit++, an iOS application that plays audio and visual content as a user touches parts of a 3D print. With Talkit++, a visually impaired student can explore a printed model by touch, and use finger gestures and speech commands to get more information about specific elements of the model.

Talkit++ detects the model and finger gestures using computer vision algorithms, simple accessories like paper stickers and printable trackers, and the built-in RGB camera on an iOS device. Based on the model’s position and the user’s input, Talkit++ speaks textual information, plays audio recordings, and displays visual animations.
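The step from "model position plus finger position" to "which annotation to speak" can be illustrated with a small sketch. This is not the Talkit++ implementation: it is written in Python rather than the app's iOS code, and it assumes that the vision stage has already produced the tracker's pose (a rotation matrix and a translation) and the fingertip position in camera coordinates. The `Annotation` type, the spherical touch regions, and the function names are all hypothetical, introduced only for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Annotation:
    """Hypothetical annotated element: a spherical touch region plus text to speak."""
    name: str
    center: Vec3      # position in the model's own coordinate frame (mm)
    radius: float     # touch tolerance around the center (mm)
    text: str         # textual information to be spoken


def camera_to_model(point_cam: Vec3,
                    rotation: Sequence[Sequence[float]],
                    translation: Vec3) -> Vec3:
    """Map a camera-frame point into the model frame.

    Assumes the tracker pose satisfies p_cam = R * p_model + t,
    so the inverse is p_model = R^T * (p_cam - t).
    """
    d = [point_cam[i] - translation[i] for i in range(3)]
    # Multiply by the transpose of R: component i uses column i of R.
    return tuple(sum(rotation[j][i] * d[j] for j in range(3)) for i in range(3))


def touched_annotation(fingertip_model: Vec3,
                       annotations: Sequence[Annotation]) -> Optional[Annotation]:
    """Return the closest annotation whose touch region contains the fingertip."""
    best = None
    best_dist = math.inf
    for ann in annotations:
        dist = math.dist(fingertip_model, ann.center)
        if dist <= ann.radius and dist < best_dist:
            best, best_dist = ann, dist
    return best


# Example data (made up for illustration).
ANNOTATIONS = [
    Annotation("dome", (0.0, 0.0, 50.0), 10.0, "This is the dome."),
    Annotation("door", (30.0, 0.0, 5.0), 8.0, "This is the main door."),
]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
```

With an identity rotation and the tracker at `(100, 0, 0)` in camera coordinates, a fingertip seen at `(100, 3, 52)` maps to `(0, 3, 52)` in the model frame and falls inside the hypothetical "dome" region, so its text would be handed to a speech synthesizer. A real system would also need to smooth the tracked positions over several frames before triggering speech.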

Demo

Related papers & publications

Presented at ASSETS ’17: Markit and Talkit, a Low-Barrier Toolkit to Augment 3D Printed Models