Hands-On: Using Gestures to Control Descriptions of a Virtual Environment for People with Visual Impairments

Demo

Authors: Ricardo E. Gonzalez Penuela, Wren Poremba, Christina Trice, and Shiri Azenkot.

Short Summary of Work

Virtual reality (VR) uses three main senses to relay information: sight, sound, and touch. People with visual impairments (PVI) rely primarily on sound and haptic feedback (touch) to receive information about VR environments. While researchers have explored several approaches to make navigation and perception of objects more accessible in VR, none of them offers a natural way to request descriptions of objects or to control the flow of auditory information.

To address this gap, we designed a set of hand gestures that PVI can perform to request descriptions about the environment.

Hand Gestures

We designed VR haptic gloves based on the open-source project LucidVR [10]. The gloves can track the user’s finger curling, detect hand movements in VR, and provide force feedback (FFB) through the user’s fingers.

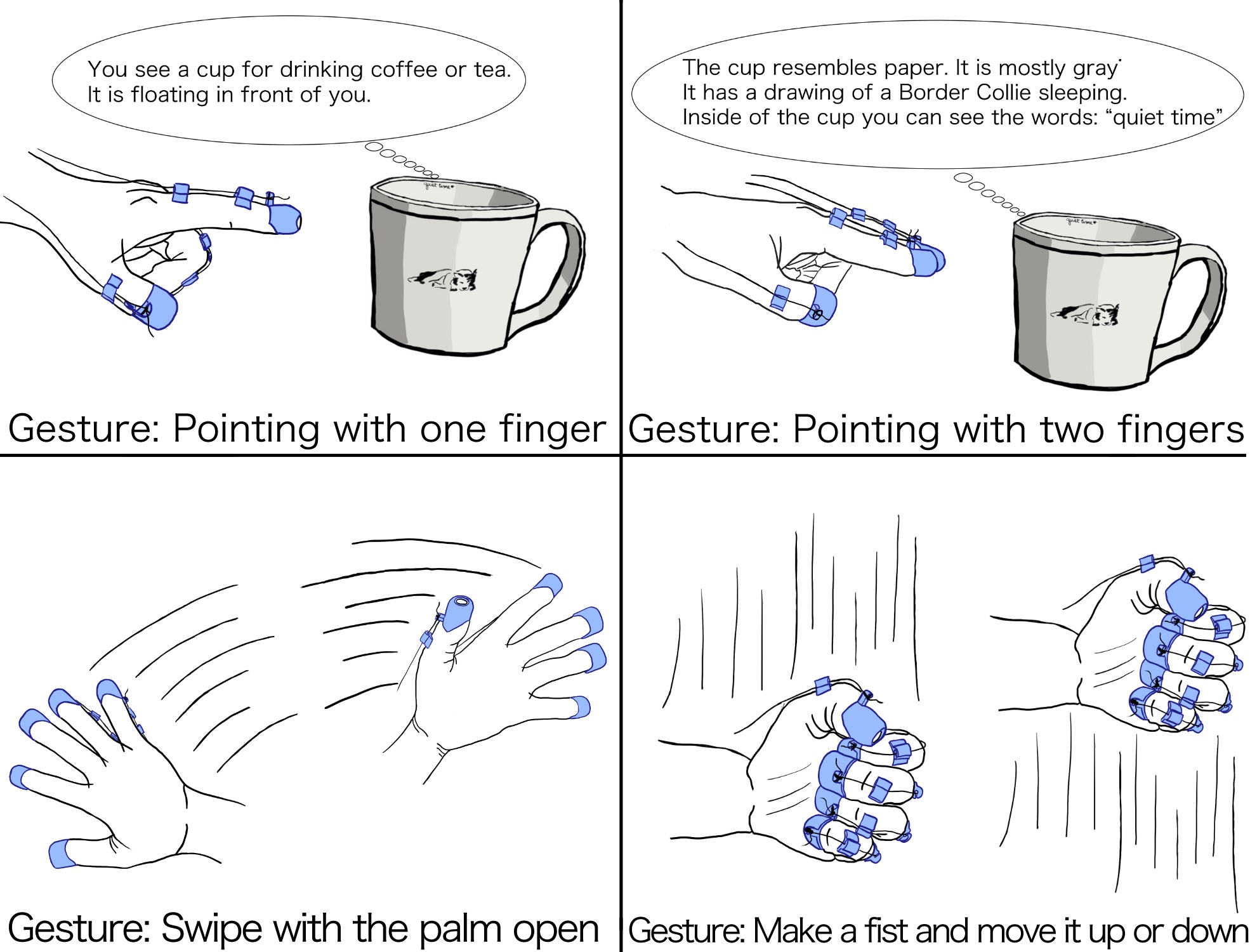

Informed by the gloves’ capabilities and previous work (e.g., SeeingVR, VizWiz, Seeing AI, OrCam) [1–3, 20], we designed a series of hand gestures that can be performed to trigger descriptions interactively. These gestures allow the user to obtain information about the environment and to dynamically control the speech rate of the voice reader.

- Point with one finger (e.g., the index finger) towards an object to trigger a general description of the object (e.g., You see a cup for drinking coffee or tea. It is floating in front of you).

- Point with two adjacent fingers (e.g., the index finger and the middle finger) towards an object to trigger a more detailed description of the object (e.g., The cup is mostly gray. It has a drawing of a Border Collie sleeping. Inside the cup you can see the words: “quiet time”).

- After pointing towards an object, flick to the right or to the left to trigger a description of a nearby object in the direction of the flick.

- Perform a wide wave from left to right to have the voice reader read the descriptions of the objects in view.

- Make a fist and move it up or down to raise or lower the speech rate of the verbal feedback.
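
As an illustration of how such gestures could be recognized from the gloves’ finger-curl readings, the following is a minimal Python sketch and not the implementation used in this work. It assumes curl values normalized to [0, 1] (0 = fully extended, 1 = fully curled) for the four non-thumb fingers, and hand displacements measured in meters. All names, thresholds, and rate limits (`CURL_THRESHOLD`, `FLICK_MIN_M`, `classify_pose`, `flick_direction`, `adjust_speech_rate`) are hypothetical. The wide-wave gesture is omitted for brevity.

```python
from enum import Enum, auto
from typing import Optional, Sequence

# Hypothetical tuning values; none of these come from the original work.
CURL_THRESHOLD = 0.6   # curl below this counts as "extended"
FLICK_MIN_M = 0.10     # minimum lateral hand travel to count as a flick


class Gesture(Enum):
    POINT_ONE = auto()  # one extended finger: general description
    POINT_TWO = auto()  # two adjacent extended fingers: detailed description
    FIST = auto()       # all fingers curled: speech-rate control
    NONE = auto()       # anything else


def classify_pose(curls: Sequence[float]) -> Gesture:
    """Classify a static pose from four curls ordered index..pinky."""
    if len(curls) != 4:
        raise ValueError("expected four curl values (index..pinky)")
    extended = [c < CURL_THRESHOLD for c in curls]
    count = sum(extended)
    if count == 0:
        return Gesture.FIST
    if count == 1:
        return Gesture.POINT_ONE
    if count == 2:
        first = extended.index(True)
        # The two extended fingers must be neighbors.
        if extended[first + 1]:
            return Gesture.POINT_TWO
    return Gesture.NONE


def flick_direction(dx_m: float) -> Optional[str]:
    """Return 'left' or 'right' for a lateral flick, or None if too small."""
    if abs(dx_m) < FLICK_MIN_M:
        return None
    return "right" if dx_m > 0 else "left"


def adjust_speech_rate(rate: float, dy_m: float, gain: float = 2.0,
                       lo: float = 0.5, hi: float = 3.0) -> float:
    """Raise or lower the speech rate with vertical fist movement, clamped."""
    return max(lo, min(hi, rate + gain * dy_m))
```

In this sketch, a pose such as `classify_pose([0.1, 0.2, 0.9, 0.9])` would map to the detailed-description gesture, while moving a fist upward by 0.2 m would raise a rate of 1.0 by `gain * 0.2`. A real system would likely also need temporal smoothing and pointing-ray intersection with scene objects, which are not shown here.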

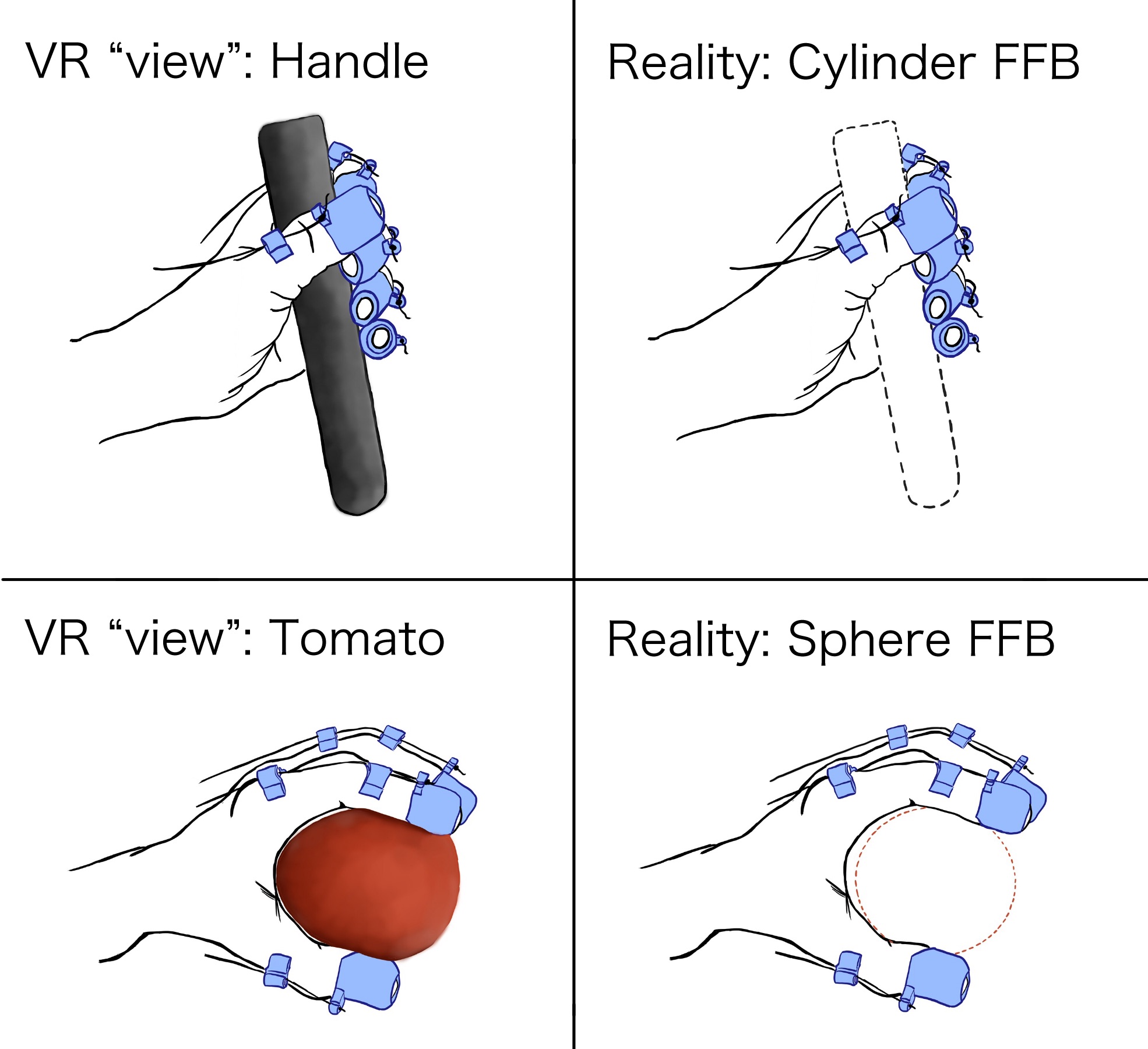

Haptics

In addition to the gestures, PVI can also perceive a simplified version of the shape of held objects (e.g., cylinders, spheres, and rectangular shapes). The gloves combine retractable strings with servo motors: each servo uses a horn to limit the range of motion of a string’s spool, which restricts how far the user’s finger can curl and lets the user perceive the virtual object.
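
To make the idea of restricting finger curl concrete, the sketch below shows one way a held object’s size could be turned into a curl limit and then into a servo target. It is a simplified illustration under stated assumptions, not the gloves’ firmware: the linear mapping, the 0.05 m maximum grasp radius, the 180-degree servo range, and the function names `curl_limit_for_radius` and `servo_angle_deg` are all hypothetical.

```python
def curl_limit_for_radius(radius_m: float,
                          max_grasp_radius_m: float = 0.05) -> float:
    """Return the maximum allowed curl in [0, 1] for a round object.

    Assumption: larger objects stop the fingers earlier, modeled here as a
    linear falloff. 0 means the finger cannot curl at all; 1 means it can
    curl fully (nothing is held).
    """
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return max(0.0, min(1.0, 1.0 - radius_m / max_grasp_radius_m))


def servo_angle_deg(curl_limit: float, servo_range_deg: float = 180.0) -> float:
    """Map a curl limit to a horn angle that blocks the spool at that point.

    Assumption: the horn angle scales linearly with the allowed curl.
    """
    if not 0.0 <= curl_limit <= 1.0:
        raise ValueError("curl_limit must be within [0, 1]")
    return curl_limit * servo_range_deg
```

Under these assumptions, a sphere with a 0.025 m radius would allow each finger to curl halfway, corresponding to a horn angle of 90 degrees. Shapes such as cylinders or boxes would need per-finger limits derived from the object’s geometry, which this sketch does not attempt.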